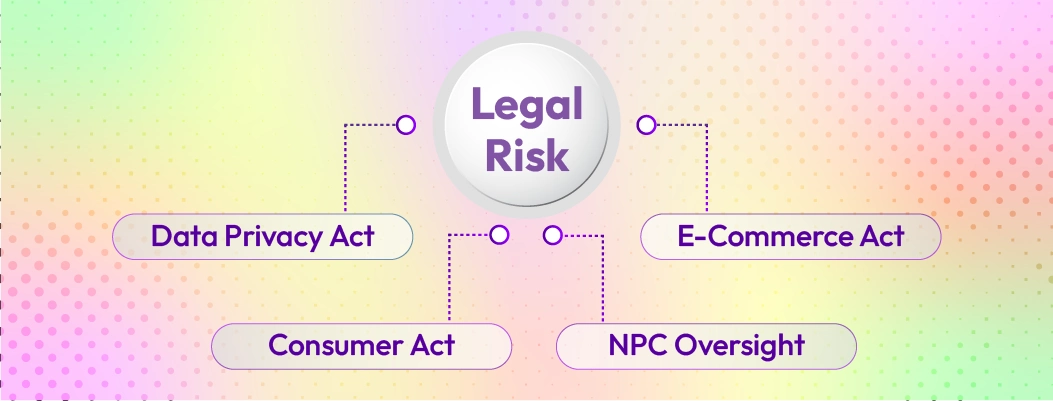

Businesses in the Philippines face several significant legal risks when deploying AI chatbots. Understanding these risks is essential to avoid violations, fines, and dissatisfied customers.

1. Unauthorized collection of sensitive data

Without adequate safeguards, chatbots can inadvertently collect personal or sensitive data. Doing so may violate the Data Privacy Act and lead to fines. Free AI chat tools may seem appealing, but businesses must ensure these solutions comply with local privacy regulations.

AI tools can unintentionally collect:

- Names, addresses, device IDs
- Payment information
- Shopping history
- Medical or personal preference data

A 2024 Cisco Data Privacy Benchmark Report found that 60% of organizations struggle to track how AI tools process personal data, meaning many companies may violate privacy laws without realizing it.
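One way to reduce accidental collection is to redact obvious identifiers from a message before it is logged or forwarded to a third-party model. The sketch below is a minimal illustration, not a compliance tool: the `PATTERNS` table, the `redact` function, and the regular expressions (including the assumed Philippine mobile format `09XXXXXXXXX` / `+639XXXXXXXXX`) are hypothetical and cover only a few simple cases.

```python
import re

# Hypothetical patterns for illustration only; real deployments need far
# broader coverage (names, addresses, IDs) and should be reviewed by counsel.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    # 13 to 16 digits, optionally separated by single spaces or hyphens.
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
    # Assumed Philippine mobile format: 09XXXXXXXXX or +639XXXXXXXXX.
    "PHONE": re.compile(r"(?<!\d)(?:\+63|0)9\d{9}\b"),
}


def redact(message: str) -> str:
    """Replace each matched identifier with a bracketed label."""
    for label, pattern in PATTERNS.items():
        message = pattern.sub(f"[{label}]", message)
    return message


if __name__ == "__main__":
    raw = "Email juan@example.com or call 09171234567, card 4111 1111 1111 1111."
    print(redact(raw))
    # Email [EMAIL] or call [PHONE], card [CARD].
```

Pattern-based redaction will miss free-text details such as names or medical information, so it complements, rather than replaces, limits on what the chatbot asks for and stores.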

2. Lack of user consent

The NPC requires explicit consent before data is collected or analyzed by AI systems. If customers talk to a chatbot, they must be told:

Failure to do so can result in penalties under the Data Privacy Act.
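A consent requirement like this can be enforced in code by refusing to store or process any message until consent has been recorded. The following is a minimal sketch under stated assumptions: `Session`, `record_consent`, `handle_message`, and the wording of `CONSENT_NOTICE` are all hypothetical placeholders, and the notice text is not legal language.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import List, Optional

# Placeholder wording; the actual notice should be drafted with legal advice.
CONSENT_NOTICE = (
    "You are chatting with an automated assistant. "
    "Reply YES to consent to the processing of your personal data."
)


@dataclass
class Session:
    user_id: str
    consent_given_at: Optional[datetime] = None
    stored_messages: List[str] = field(default_factory=list)


def record_consent(session: Session) -> None:
    """Store a timestamp so consent can be demonstrated later."""
    session.consent_given_at = datetime.now(timezone.utc)


def handle_message(session: Session, text: str) -> str:
    """Store and process a message only after consent is on record."""
    if session.consent_given_at is None:
        if text.strip().upper() == "YES":
            record_consent(session)
            return "Thank you. How can I help?"
        # Nothing is stored or analyzed before consent.
        return CONSENT_NOTICE
    session.stored_messages.append(text)
    return "Message received."
```

Keeping the consent timestamp on the session gives the business a record it can point to if consent is later questioned.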

3. Data breaches or leaks

In 2023–2024, the Philippines recorded multiple high-profile cybersecurity incidents, with over 1.3 million users affected by digital data breaches according to DICT reports.

If a chatbot leaks data due to weak encryption or cloud misconfiguration, the business is liable, not the AI vendor. This makes evaluating the risks and disadvantages of chatbots a critical step before deployment.

4. AI hallucinations and false information

Generative AI models (ChatGPT, Google Gemini, Meta LLaMA) sometimes produce incorrect or misleading answers.

Example risks:

- Wrong return/refund instructions
- False promo or pricing details
- Medical or health-related misinformation

Under the Consumer Act, providing false information can lead to legal disputes with consumers.

Best practice: Implement human oversight for high-stakes queries. Use multi-agent live chat systems that escalate complex questions to human agents.
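As a rough illustration of escalation, the sketch below routes any query that mentions a high-stakes topic to a human agent instead of the bot. The keyword list and the `route` function are assumptions made for this example; a production system would likely use a trained classifier and confidence thresholds rather than keywords alone.

```python
import re

# Hypothetical keyword list based on the risk areas named above
# (refunds, pricing and promos, health).
HIGH_STAKES_KEYWORDS = {
    "refund", "return", "promo", "discount", "price", "pricing",
    "medical", "medicine", "dosage", "allergy",
}


def route(query: str) -> str:
    """Return "human" for high-stakes queries, otherwise "bot"."""
    words = set(re.findall(r"[a-z]+", query.lower()))
    return "human" if words & HIGH_STAKES_KEYWORDS else "bot"


if __name__ == "__main__":
    print(route("Can I get a refund for my order?"))  # human
    print(route("What are your store hours?"))        # bot
```

Erring toward escalation costs agent time but limits the chance that a generated answer about refunds, prices, or health reaches a customer unchecked.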

5. Discrimination and bias

If a chatbot shows different offers or prices depending on a user's profile, location, or language, this may violate consumer rights and unfair trade regulations.

The UNESCO AI Ethics Guidance and NIST AI Risk Management Framework warn that AI systems can accidentally discriminate without proper auditing.
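A simple audit can surface this kind of disparity by comparing the prices a chatbot quoted to different user groups. The sketch below is illustrative only: the `price_gaps` function, the `(group, price)` log format, and the 10% tolerance are assumptions, not part of either framework.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, List, Tuple


def price_gaps(
    quotes: List[Tuple[str, float]], tolerance: float = 0.10
) -> Dict[str, float]:
    """Flag groups whose mean quoted price deviates from the overall mean.

    quotes: (group, price) pairs, e.g. taken from chatbot logs.
    Returns {group: relative deviation} for deviations above tolerance.
    """
    by_group = defaultdict(list)
    for group, price in quotes:
        by_group[group].append(price)

    overall = mean([price for _, price in quotes])
    flagged = {}
    for group, prices in by_group.items():
        deviation = mean(prices) / overall - 1
        if abs(deviation) > tolerance:
            flagged[group] = deviation
    return flagged


if __name__ == "__main__":
    log = [("Manila", 100), ("Manila", 100), ("Cebu", 100), ("Davao", 130)]
    print(sorted(price_gaps(log)))  # ['Davao']
```

A flagged group is a prompt for human review rather than proof of discrimination, since legitimate factors such as shipping costs can also explain price differences.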
