The market for unfiltered AI chatbot tools has matured significantly, offering businesses options that range from hosted API platforms to self-hosted open-source runtimes. Here are six leading tools in 2025 for creative experimentation, internal testing, and content generation with fewer restrictions than consumer chat apps. The hosted APIs offer configurable behavior, and the local runtimes apply no platform-level filtering.

1. OpenAI API with Custom Parameters

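OpenAI's API gives developers direct access to GPT models with adjustable generation parameters and custom system prompts. Content screening is handled by a separate, optional Moderation endpoint rather than by a filter toggle on the model, and all use remains subject to OpenAI's usage policies.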

Key Features

- Direct access to GPT-4o, GPT-4 Turbo, and other OpenAI models

- Adjustable temperature, top_p, and frequency penalty parameters

- Custom system prompts for persona creation

- Fine-tuning capabilities for specialized applications

- Comprehensive API documentation and SDKs

- Free, optional Moderation endpoint for screening inputs and outputs
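Here is a minimal sketch of how the parameters above map onto a request. It assumes the public Chat Completions (`/v1/chat/completions`) and Moderation (`/v1/moderations`) REST endpoints and uses only Python's standard library. The default values, the choice of `omni-moderation-latest`, and the helper names are illustrative assumptions, so check OpenAI's API reference before relying on them.

```python
import json
import urllib.request

OPENAI_BASE_URL = "https://api.openai.com/v1"


def build_chat_request(system_prompt: str, user_message: str, model: str = "gpt-4o",
                       temperature: float = 0.9, top_p: float = 1.0,
                       frequency_penalty: float = 0.5) -> dict:
    """Chat Completions body with a custom persona and sampling parameters."""
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": system_prompt},  # persona / behavior instructions
            {"role": "user", "content": user_message},
        ],
        "temperature": temperature,              # 0 to 2: higher means more varied output
        "top_p": top_p,                          # nucleus sampling; usually tune this or temperature
        "frequency_penalty": frequency_penalty,  # -2 to 2: positive values discourage repetition
    }


def build_moderation_request(text: str, model: str = "omni-moderation-latest") -> dict:
    """Body for the separate Moderation endpoint."""
    return {"model": model, "input": text}


def post_json(path: str, body: dict, api_key: str) -> dict:
    """POST a JSON body to the OpenAI API and return the decoded response."""
    req = urllib.request.Request(
        OPENAI_BASE_URL + path,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "Authorization": f"Bearer {api_key}"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read().decode("utf-8"))


def generate_and_screen(api_key: str, system_prompt: str, user_message: str) -> tuple:
    """Generate a reply, then ask the Moderation endpoint whether it is flagged."""
    chat = post_json("/chat/completions", build_chat_request(system_prompt, user_message), api_key)
    reply = chat["choices"][0]["message"]["content"]
    moderation = post_json("/moderations", build_moderation_request(reply), api_key)
    return reply, moderation["results"][0]["flagged"]
```

Keeping request construction separate from the network call makes it easy to log or unit-test exactly what you send.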

Best For

Development teams building custom applications, businesses prototyping conversational AI features, and organizations needing programmatic control over AI behavior.

Pricing

Pay-per-token usage. GPT-4o costs $2.50 per 1M input tokens and $10 per 1M output tokens; GPT-4o-mini costs $0.15 per 1M input tokens and $0.60 per 1M output tokens. Asynchronous Batch API jobs are billed at a 50% discount.
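As a rough budgeting aid, here is a sketch that turns the per-1M-token prices above into a cost estimate. The price table is copied from this section and will go stale as OpenAI updates its pricing. The function name and the example workload are hypothetical.

```python
# Prices in USD per 1M tokens, taken from the pricing paragraph above.
# Assumption: these go stale; confirm against OpenAI's current pricing page.
PRICES_PER_MILLION = {
    "gpt-4o": {"input": 2.50, "output": 10.00},
    "gpt-4o-mini": {"input": 0.15, "output": 0.60},
}


def estimate_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the estimated USD cost of the given token counts for one model."""
    prices = PRICES_PER_MILLION[model]
    return (input_tokens * prices["input"] + output_tokens * prices["output"]) / 1_000_000


if __name__ == "__main__":
    # Hypothetical workload: 10,000 requests, 1,500 input and 500 output tokens each.
    requests = 10_000
    for model in PRICES_PER_MILLION:
        cost = estimate_cost(model, requests * 1_500, requests * 500)
        print(f"{model}: ${cost:,.2f}")
```

For that workload (15M input and 5M output tokens), GPT-4o comes to about $87.50 and GPT-4o-mini to about $5.25.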

2. Anthropic Claude API

Anthropic's Claude API provides access to highly capable language models for enterprise applications. Behavior is controlled through system prompts, response prefills, and generation parameters rather than through switchable safety filters.

Key Features

- Access to Claude Sonnet 4.5, Claude Opus 4.1, and Claude Haiku models

- Models trained with Anthropic's Constitutional AI approach, with application-specific boundaries set through system prompts

- Extended context windows (up to 200K tokens)

- System prompts and prefill options for precise control

- Strong reasoning capabilities for complex tasks

- Workspaces for team collaboration
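System prompts and prefills map onto the Messages API roughly as follows. This sketch uses only Python's standard library. It assumes the `/v1/messages` endpoint, the `anthropic-version: 2023-06-01` header, and the model alias `claude-sonnet-4-5`. The helper names are hypothetical, so verify the details against Anthropic's API reference.

```python
import json
import urllib.request

ANTHROPIC_MESSAGES_URL = "https://api.anthropic.com/v1/messages"


def build_messages_request(system_prompt: str, user_message: str, prefill: str = "",
                           model: str = "claude-sonnet-4-5", max_tokens: int = 1024) -> dict:
    """Build a Messages API body; a trailing assistant turn acts as a prefill."""
    messages = [{"role": "user", "content": user_message}]
    if prefill:
        # The API rejects a final assistant turn that ends in whitespace, so trim it.
        messages.append({"role": "assistant", "content": prefill.rstrip()})
    return {
        "model": model,
        "max_tokens": max_tokens,  # required by the Messages API
        "system": system_prompt,   # the system prompt is a top-level field, not a message
        "messages": messages,
    }


def send_messages_request(body: dict, api_key: str) -> str:
    """POST the body and return the prefill plus Claude's continuation as one string."""
    req = urllib.request.Request(
        ANTHROPIC_MESSAGES_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "x-api-key": api_key,
            "anthropic-version": "2023-06-01",
            "content-type": "application/json",
        },
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        data = json.loads(resp.read().decode("utf-8"))
    # The response continues from the prefill, so prepend it to get the full reply.
    last = body["messages"][-1]
    prefix = last["content"] if last["role"] == "assistant" else ""
    text = "".join(block["text"] for block in data["content"] if block["type"] == "text")
    return prefix + text
```

Prefilling `{` is a common way to steer Claude toward JSON output without extra instructions.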

Best For

Enterprises needing sophisticated AI with customizable safety layers, teams handling complex reasoning tasks, and businesses requiring long-context understanding.

Pricing

Pay-per-token with tiered pricing. Claude Sonnet 4.5 at $3 per 1M input tokens and $15 per 1M output tokens. Claude Haiku 4.5 at $1 per 1M input tokens and $5 per 1M output tokens. Claude Opus 4.1 at $15 per 1M input tokens and $75 per 1M output tokens.

3. LM Studio

LM Studio enables businesses to run open-weight language models entirely on their own hardware, with no platform-level content filtering. Any restrictions come from the safety tuning of the model you load.

Key Features

- Run Llama 3, Mistral, Mixtral, Gemma, Qwen, DeepSeek, and other open-source LLMs locally

- Local inference: prompts and outputs stay on your machine

- No usage limits, API costs, or rate restrictions

- Full customization of model behavior and parameters

- Hardware acceleration support (GPU/Metal)

- Offline functionality for secure environments

- User-friendly chat interface, plus a local server with an OpenAI-compatible API
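LM Studio can serve a loaded model through a local server that accepts the OpenAI Chat Completions format, which is how applications typically integrate with it. Here is a minimal sketch. It assumes the server runs at its default address, `http://localhost:1234`, and that `model` matches the identifier of a model you have loaded. The helper names and the example model name are hypothetical.

```python
import json
import urllib.request

LM_STUDIO_BASE = "http://localhost:1234/v1"  # default address; change it if you configured another port


def build_local_chat(model: str, prompt: str, system_prompt: str = "",
                     temperature: float = 0.8) -> dict:
    """Build an OpenAI-style chat body for LM Studio's local server."""
    messages = []
    if system_prompt:
        messages.append({"role": "system", "content": system_prompt})
    messages.append({"role": "user", "content": prompt})
    return {"model": model, "messages": messages, "temperature": temperature, "stream": False}


def chat_local(body: dict, base_url: str = LM_STUDIO_BASE, timeout: float = 300.0) -> str:
    """Send the request to the local server (no API key needed) and return the reply text."""
    req = urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    # Generous timeout: local inference on modest hardware can be slow.
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        data = json.loads(resp.read().decode("utf-8"))
    return data["choices"][0]["message"]["content"]
```

A call such as `chat_local(build_local_chat("qwen2.5-7b-instruct", "Draft a product tagline."))` never leaves the machine. The model name in that call is hypothetical.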

Best For

Companies with strict data privacy requirements, development teams wanting complete control, security-conscious organizations, and businesses processing sensitive information.

Pricing

Free to use; only costs are hardware infrastructure (GPU-capable machines recommended for optimal performance).

4. Poe by Quora

Poe provides consumer and business access to multiple AI models, including Claude, GPT-4, and Gemini Pro. Users can create custom bots with their own prompts and personas, subject to Poe's policies and those of each model provider.

Key Features

- Access to multiple leading AI models in one interface (GPT-4, Claude 3, Gemini Pro, and more)

- Custom bot creation with personality customization

- Quick switching between different models

- Mobile and web access

- A bot marketplace for discovering community-created bots

- API access for developers

Best For

Teams experimenting with different AI models, content creators needing variety, businesses comparing model outputs, and users wanting easy multi-model access.

Pricing

Free tier available with a limited daily allowance. Poe subscriptions start at $19.99/month (as of March 2025) and provide a larger compute-point allowance for premium models rather than truly unlimited use.

5. Google AI Studio

Google's AI Studio provides browser-based access to Gemini models with adjustable safety settings for testing and experimentation.

Key Features

- Direct access to Gemini 2.5 Pro and Flash models

- Adjustable safety thresholds (including "Block none" option for stable models)

- Prompt tuning and testing interface

- System instructions for behavior customization

- Multimodal capabilities (text, images, audio, video)

- Free tier for experimentation
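In the Gemini API, the safety thresholds and system instructions described above are set on each request. This sketch assumes the REST `generateContent` endpoint on `generativelanguage.googleapis.com/v1beta`, an API key created in AI Studio, and the model name `gemini-2.5-flash`. The default threshold and the helper names are illustrative choices, not recommendations.

```python
import json
import urllib.request
from typing import Optional

GEMINI_URL = "https://generativelanguage.googleapis.com/v1beta/models/{model}:generateContent"

HARM_CATEGORIES = (
    "HARM_CATEGORY_HARASSMENT",
    "HARM_CATEGORY_HATE_SPEECH",
    "HARM_CATEGORY_SEXUALLY_EXPLICIT",
    "HARM_CATEGORY_DANGEROUS_CONTENT",
)


def build_gemini_request(prompt: str, system_instruction: str,
                         threshold: str = "BLOCK_ONLY_HIGH", temperature: float = 1.0) -> dict:
    """generateContent body applying one blocking threshold to every harm category."""
    return {
        "systemInstruction": {"parts": [{"text": system_instruction}]},
        "contents": [{"role": "user", "parts": [{"text": prompt}]}],
        # Other thresholds include BLOCK_LOW_AND_ABOVE, BLOCK_MEDIUM_AND_ABOVE, and BLOCK_NONE.
        "safetySettings": [{"category": c, "threshold": threshold} for c in HARM_CATEGORIES],
        "generationConfig": {"temperature": temperature},
    }


def generate(body: dict, api_key: str, model: str = "gemini-2.5-flash") -> dict:
    """POST the body to generateContent and return the raw JSON response."""
    req = urllib.request.Request(
        GEMINI_URL.format(model=model),
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "x-goog-api-key": api_key},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read().decode("utf-8"))


def extract_text(response: dict) -> Optional[str]:
    """Return the first candidate's text, or None if the prompt or reply was blocked."""
    candidates = response.get("candidates") or []
    if not candidates:
        return None  # e.g. the prompt itself was blocked; see response["promptFeedback"]
    parts = candidates[0].get("content", {}).get("parts", [])
    text = "".join(p.get("text", "") for p in parts)
    return text or None  # empty output typically comes with a non-STOP finishReason
```

Checking for a missing or empty candidate matters because a blocked prompt or reply does not raise an HTTP error. The response simply comes back without usable text.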

Best For

Teams quickly testing ideas, developers experimenting with multimodal AI, businesses evaluating Gemini capabilities before API integration, and prototyping conversational experiences.

Pricing

Free tier available with rate limits. Pay-per-token pricing for production use through the Gemini API's paid tier or Google Cloud Vertex AI.

6. Self-Hosted Ollama

Ollama is an open-source tool that makes running LLMs locally about as simple as pulling and running a Docker image. It gives you complete control over models, and no external service is needed once a model has been downloaded.

Key Features

- One-command installation and model management

- Support for popular models (Llama 3, Mistral, CodeLlama, Phi, Gemma, and more)

- REST API for easy integration into applications

- Model customization via Modelfiles

- GPU acceleration support

- Community-driven model library

- Cross-platform support (macOS, Linux, Windows)
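Ollama's REST API listens on `http://localhost:11434` by default. Here is a minimal sketch of a non-streaming call to its `/api/chat` endpoint. It assumes a model such as `llama3` has already been pulled with `ollama pull llama3`. The helper names are hypothetical.

```python
import json
import urllib.request

OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"


def build_ollama_chat(model: str, history: list, temperature: float = 0.7) -> dict:
    """Build a non-streaming /api/chat body; sampling settings go under "options"."""
    return {
        "model": model,
        "messages": history,
        "stream": False,  # return one JSON object instead of a stream of chunks
        "options": {"temperature": temperature},
    }


def ollama_chat(history: list, model: str = "llama3", url: str = OLLAMA_CHAT_URL) -> str:
    """Send the conversation, append the assistant reply to history, and return it."""
    body = build_ollama_chat(model, history)
    req = urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),  # serialized before history is mutated below
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        data = json.loads(resp.read().decode("utf-8"))
    reply = data["message"]["content"]
    history.append({"role": "assistant", "content": reply})
    return reply
```

Instead of passing defaults like temperature or a system prompt on every call, you can bake them into a custom model with a Modelfile (`PARAMETER temperature 0.7`, `SYSTEM "..."`) and build it with `ollama create`.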

Best For

DevOps teams comfortable with self-hosting, organizations requiring air-gapped deployments, developers building local-first applications, and teams wanting to avoid per-token costs.

Pricing

Completely free and open-source; requires appropriate hardware (GPU recommended but not required).

Comparison Table: Which Tool Should You Choose?

| Tool | Best For | Key Features | Pricing |
|---|---|---|---|
| OpenAI API | Custom apps, AI prototyping | GPT-4o/4 Turbo, adjustable parameters, custom prompts, fine-tuning | Pay-per-token: $2.50/1M input, $10/1M output (GPT-4o) |
| Anthropic Claude API | Enterprise AI, long-context tasks | Claude models, Constitutional AI, extended context, system prompts | Pay-per-token: $1–$75 per 1M tokens, depending on model |
| LM Studio | Privacy-focused companies | Run LLMs locally, full control, offline, GPU support | Free (hardware costs only) |
| Poe by Quora | Multi-model experiments | GPT-4, Claude, Gemini, custom bots, bot marketplace | Free tier; $19.99/month for premium |
| Google AI Studio | Rapid prototyping, multimodal AI | Gemini models, adjustable safety, prompt tuning, multimodal | Free tier; pay-per-token for production |
| Self-Hosted Ollama | DevOps/local-first teams | One-command install, REST API, multiple models, GPU support | Free & open-source; hardware recommended |

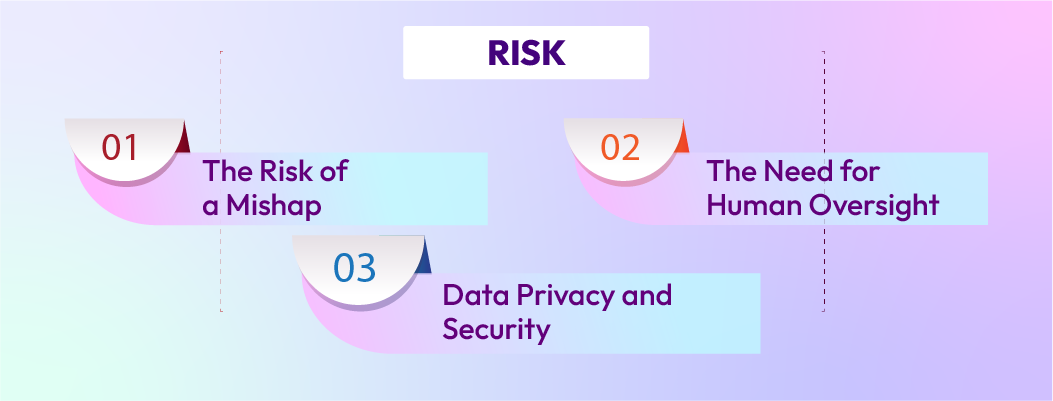

Key Considerations When Selecting Your Tool

As you evaluate these options, prioritize these factors:

Use Case Alignment:

- Internal creative testing vs. production applications

- Single model vs. multi-model experimentation

- Simple prompting vs. fine-tuning requirements

- Public-facing vs. internal-only usage

Technical Requirements:

- API integration vs. self-hosted deployment

- DevOps capabilities within your team

- Hardware availability for local models

- Development resources for custom implementations
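One way to keep the API-versus-self-hosted decision reversible is to target the OpenAI-compatible Chat Completions format, which OpenAI, LM Studio's local server, and Ollama's `/v1` compatibility endpoint all accept. With that approach, switching backends only means changing the base URL and credentials. Here is a sketch under that assumption. The backend names, default local ports, and helper functions are illustrative.

```python
from dataclasses import dataclass
from typing import Dict, Mapping, Optional


@dataclass(frozen=True)
class Backend:
    base_url: str
    api_key_env: Optional[str]  # None for local servers that need no key


# Illustrative registry; local URLs assume each server's default port.
BACKENDS: Dict[str, Backend] = {
    "openai": Backend("https://api.openai.com/v1", "OPENAI_API_KEY"),
    "lmstudio": Backend("http://localhost:1234/v1", None),
    "ollama": Backend("http://localhost:11434/v1", None),
}


def chat_completions_url(name: str) -> str:
    """Full Chat Completions URL for a named backend."""
    return BACKENDS[name].base_url.rstrip("/") + "/chat/completions"


def request_headers(name: str, env: Mapping[str, str]) -> Dict[str, str]:
    """Headers for a backend, adding a bearer token only where one is required."""
    backend = BACKENDS[name]
    headers = {"Content-Type": "application/json"}
    if backend.api_key_env:
        key = env.get(backend.api_key_env)
        if not key:
            raise KeyError(f"{backend.api_key_env} is not set")
        headers["Authorization"] = f"Bearer {key}"
    return headers
```

Passing `os.environ` as `env` in production, and a plain dict in tests, keeps credentials out of the code.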

Budget and Pricing:

- Upfront infrastructure costs vs. ongoing API expenses

- Expected usage volume and cost projections

- Free tiers for testing and prototyping

- Long-term scalability costs
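The trade-off between upfront hardware and ongoing API spend reduces to a simple break-even calculation. The sketch below is illustrative only. The workload, the $6,000 workstation price, and the $50/month running cost are hypothetical figures, while the $3/$15 per 1M token rates are the Claude Sonnet 4.5 prices quoted earlier. The calculation also ignores quality differences between hosted and local models.

```python
import math


def monthly_api_cost(requests_per_month: int, input_tokens: int, output_tokens: int,
                     input_price_per_m: float, output_price_per_m: float) -> float:
    """USD per month for a steady workload billed per 1M tokens."""
    total_in = requests_per_month * input_tokens
    total_out = requests_per_month * output_tokens
    return (total_in * input_price_per_m + total_out * output_price_per_m) / 1_000_000


def breakeven_months(hardware_cost: float, monthly_api: float,
                     monthly_local_cost: float = 0.0) -> float:
    """Months until local hardware costs less than the API; inf if it never does."""
    monthly_savings = monthly_api - monthly_local_cost
    if monthly_savings <= 0:
        return math.inf
    return hardware_cost / monthly_savings


if __name__ == "__main__":
    # Hypothetical: 100,000 requests/month, 1,000 input + 300 output tokens each.
    api = monthly_api_cost(100_000, 1_000, 300, 3.00, 15.00)
    months = breakeven_months(6_000, api, monthly_local_cost=50)
    print(f"API: ${api:,.2f}/month, break-even after {months:.1f} months")
```

With those numbers, the API bill is $750/month and the hardware pays for itself in about 8.6 months.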

Data Privacy and Security:

- Sensitivity of data being processed

- Compliance requirements (GDPR, HIPAA, SOC 2, etc.)

- On-premise vs. cloud deployment needs

- Data retention and training policies

Scalability and Performance:

- Expected request volume and concurrency

- Latency requirements for your use case

- Need for guaranteed uptime and SLAs

- Geographic distribution requirements
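Request volume matters because hosted APIs enforce rate limits and typically return HTTP 429 when you exceed them. A common client-side mitigation is to retry with capped exponential backoff and random jitter. The sketch below assumes your HTTP layer raises a hypothetical `RateLimitedError` on a 429 response. The retry counts and delays are illustrative defaults.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class RateLimitedError(Exception):
    """Hypothetical exception your HTTP layer raises on an HTTP 429 response."""


def call_with_backoff(fn: Callable[[], T], max_retries: int = 5, base_delay: float = 1.0,
                      max_delay: float = 30.0, sleep: Callable[[float], None] = time.sleep,
                      rng: Callable[[], float] = random.random) -> T:
    """Call fn, retrying on RateLimitedError with capped exponential backoff and full jitter."""
    for attempt in range(max_retries + 1):
        try:
            return fn()
        except RateLimitedError:
            if attempt == max_retries:
                raise  # out of retries: surface the error to the caller
            # Full jitter: sleep a random fraction of the capped exponential delay.
            delay = min(max_delay, base_delay * (2 ** attempt))
            sleep(delay * rng())
    raise ValueError("max_retries must be non-negative")
```

Injecting `sleep` and `rng` keeps the function deterministic in tests. Jitter spreads retries from many concurrent clients so they do not hit the API in lockstep.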

Safety and Control:

- Required level of content filtering

- Ability to implement custom safety layers

- Human review workflow requirements

- Legal and compliance considerations
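A custom safety layer can sit between the model and your users no matter which tool produces the text. The sketch below is a hypothetical design in which `SafetyGate` holds back any output its classifier flags and queues that output for human review. The keyword classifier is only a toy placeholder. In practice you would plug in something like a moderation endpoint or a dedicated classifier model.

```python
from dataclasses import dataclass, field
from typing import Callable, Iterable, List, Optional, Tuple


@dataclass
class Verdict:
    flagged: bool
    reason: str = ""


def keyword_classifier(blocked_terms: Iterable[str]) -> Callable[[str], Verdict]:
    """Toy classifier: flags text containing any blocked term (case-insensitive)."""
    terms = [t.lower() for t in blocked_terms]

    def classify(text: str) -> Verdict:
        lowered = text.lower()
        for term in terms:
            if term in lowered:
                return Verdict(True, f"matched {term!r}")
        return Verdict(False)

    return classify


@dataclass
class SafetyGate:
    classify: Callable[[str], Verdict]  # any function that maps text to a Verdict
    review_queue: List[Tuple[str, str]] = field(default_factory=list)

    def release(self, text: str) -> Optional[str]:
        """Return text if it passes; otherwise queue it for human review and return None."""
        verdict = self.classify(text)
        if verdict.flagged:
            self.review_queue.append((text, verdict.reason))
            return None
        return text
```

Because the classifier is just a function, you can tighten or relax filtering per use case without changing the rest of the pipeline.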
